Psychological Foundations of Language, Personality, and Associated Intelligences

“Ten fingers and ten toes, that’s all anybody used to care about” (Gardner et al., 1972). Not anymore. The way we come into the world is remarkable, at least as Western iconography depicts it, even if that depiction is somewhat abstract. At the forefront of this biological emergence, however, is a child’s ‘first contact’ with the world, made primarily through Language. What about Personality? Or, you might also be wondering: Which comes first, Language or Personality?

Complex Cognition

We clinicians, and more importantly parents, can find ourselves ‘struck and stuck’ in moments of pure elation at a child’s first speech productions. At the same time, our subsequent perceptions of the child and of ourselves, even subconscious ones, typically center on evaluating the child’s physiological and inherent cognitive capacity for the further productions that may appear in their speech. (The dualistic ‘nature vs. nurture’ paradigm underlies the crux of this blog, but it will not be explained in granular detail here because the topic could easily fill a couple of blog posts on its own.)

Spellbound by our child’s early articulations, we seek, but cannot fully know, the depths of the little one’s developing cognition; this uncertainty can be inhibiting and can test our patient curiosity. We may even feel intimidated on realizing that the cognition of our young ones (children and child patients) is more complex than we assumed and is likely not fully known to anyone. Moreover, by thin margins, their intellectual reflections may, in retrospect, surpass the comprehension and productions of our own youth (Jabbarov, 2020; Karademir & Gorgoz, 2019).

In the early days of psychology, the era of Freud, Jung, Adler, Ferenczi, and Klein, psychological testing drew on all sorts of methods, from positive and negative reinforcement and differential reward systems to association and priming tests designed to elicit behavior. Each claimed to capture and accurately measure patients’ innermost moods and habitual tendencies, in subjects ranging from bumblebees to monkeys to humans, through supposedly ‘non-invasive’ methods (for example, electroshock therapy to treat schizophrenia and obsessive-compulsive and phobic disorders) (Li & Jeong, 2020; Li et al., 2020; Liu et al., 2020).

Most of us have heard a horror story or two about how invasive, if not outright damaging (and therefore eventually outlawed), these medical-psychological procedures were. Beyond their barbaric methods, they also lacked an evidence base. That is why we, along with the scientific psychological community, eventually came to see them as incredibly arcane, and abandoned them in favor of neuropsychology, which arrived as ‘the light’ and a cavalry of sorts. The growing field of neuropsychological testing does well to incorporate non-invasive quantitative measures while also considering qualitative aspects of the human spirit (Li, 2020; Li & Jeong, 2020).

Let’s look closer and establish a basis for the beginnings of language and its processing and maturation, and then we’ll discuss it in the context of personality. Along the way, we’ll discuss the genetic and epigenetic (nature/nurture), biochemical, physiological, and then psychological landscapes of language as language feeds into personality. Last, we’ll unravel some of the kaleidoscopically mutable interweavings of personality and temperament, along with some of its more important psychological factors that not only make us human (choice and free will, decision making, premeditated thought vs. involuntary habituation) but also ultimately contribute to who we are now and who we strive to be.

Beginnings of Language: Capability vs. Ability

Widely accepted definitions exist to distinguish Language’s developmental capacity from Language ability. First, though, let’s describe how the two are related and how they differ. Let’s also expose the common ways in which Language capacity and ability are misconstrued, and therefore misused.

Which Comes First?

Even before Emotion and Personality, a child’s aptitude for Language begins to form in the womb, as early as the point when the auditory cortex begins to take shape and its underlying neural networks begin to fire; this critical period begins around the end of the first trimester and accelerates dramatically after birth. I will elaborate on Emotion and Personality shortly.

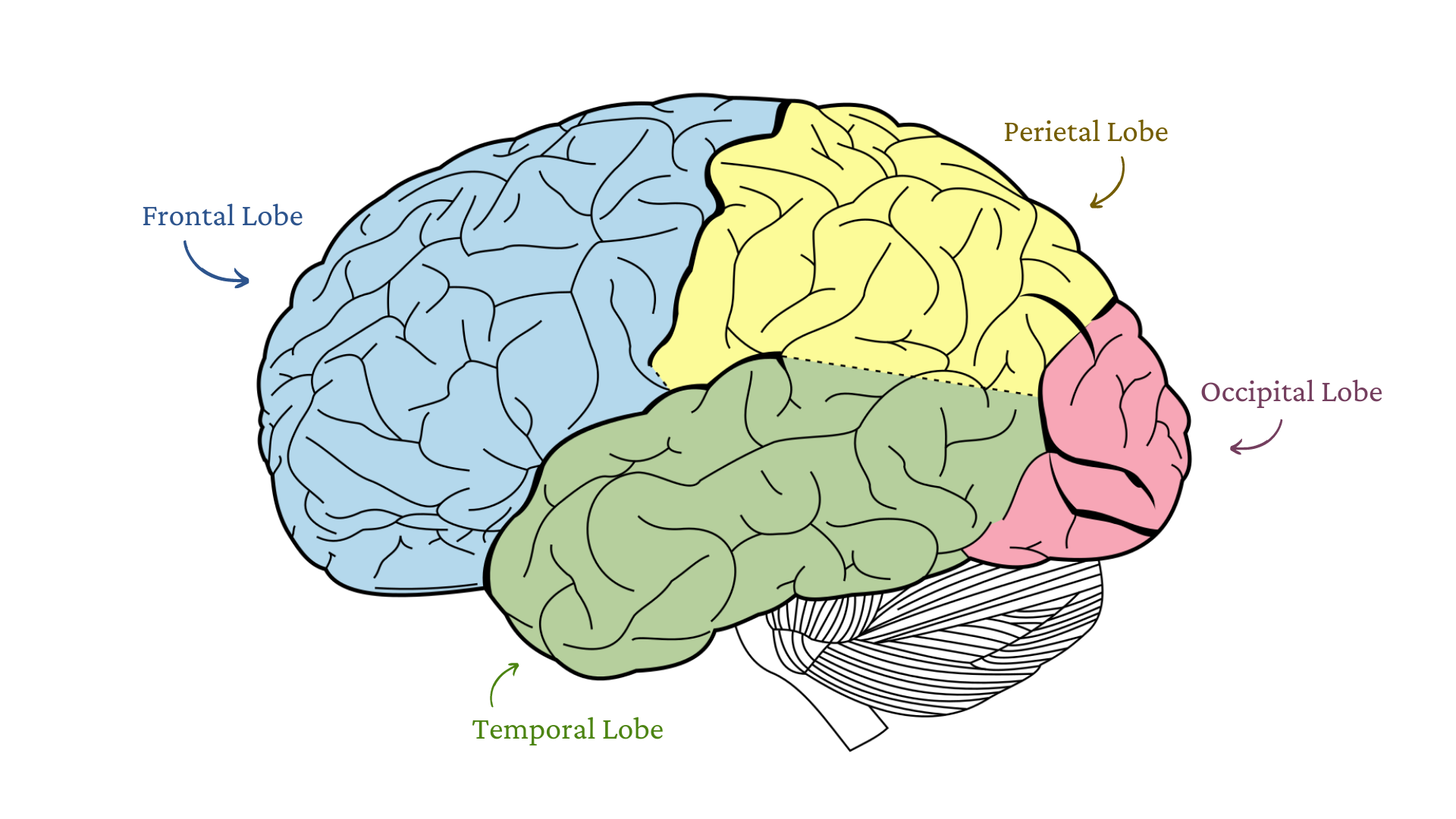

While in the womb, the fetus hears its mother modeling her native Language. Recent research in child development has shown that the fetus begins to acquire specific acoustic aspects of Speech before birth, laying groundwork in the developing brain for later Language development. As development progresses from infancy to toddlerhood, the syntactic and semantic structures of Language are organized, first as units of sound (for example, phonemes) and units of meaning (for example, morphemes), which are then contextualized with greater use. Next, the infant’s growing awareness of, and preference for, the intonations and articulations of the native tongue are mapped onto an innate and learned mental library of words, the Lexicon (Pace et al., 2017; Leung et al., 2020).

In utero and at birth, Language must first have a genetic basis that facilitates further Language intake (imprinting) and output. In this sense, the Brain’s organization and handling of phonemes, morphemes, and the other semantic and syntactic components of Language that make up the Mind’s lexicon have a molecular, biological basis. To a murky extent, then, those components may also be psychological and perhaps ‘pre-set’ in a deterministic fashion.

However, current research in genetics and epigenetics suggests that not all Language output arises in such biochemical lockstep, and that it is not mechanically drawn from a preprogrammed list of gene-expression cascades. Environment, the nurturing counterpart to nature, is widely agreed to play a significant role in determining which Language-related genes are expressed, and thereby in shaping neurotransmitter systems, neural networks, and the lexical and conceptual centers of the Brain (Justice et al., 2018).

LANGUAGE -

Cognitive Capacity

Undoubtedly, Language, even in its most minimal productions, is a demanding and incessant task for the Brain and the Mind within the Cerebrum. It points to subsurface mechanisms of cerebral capacity that support further intelligences and behaviors, both Language-related and Language-unrelated. Also noteworthy are the psychological Temperaments paired with Language, which allow differing degrees of clarity in Language-related cognition. For example, atypically skillful linguistic symbol recognition and production, while plausible, is not in the end a ‘true’ determinant of Language or related competence. Certainly, some features of Language recognition draw on memory (for example, eidetic memory) and related acuities, but these are often limited by their inability to show the depth of linguistic processing, production, and adaptability needed to adjust creatively to novel or unfamiliar social contexts. Recent psycholinguistic research, then, is at the forefront of this discussion. It follows that research on Temperament, informed by psycholinguistics, is paramount to uncovering how individualized Language and Personality are; this individuality continuously shapes the dynamic thought, creativity, and overall expression of Language (Madigan et al., 2019; Tamis-LeMonda et al., 2018).

LANGUAGE -

Genetics of Language

Genes encode proteins and drive highly complex, network-wide cascades of protein interactions. Downstream, activation of KIAA and FOXP-like transcripts affects the biological, and therefore psychological, propensities for producing Language (as seen, for example, in Williams Syndrome), but probably not in a direct manner. There is no language gene… yet. The specific genetic pathways underlying the innateness of Language are not fully known at this time. It’s worth noting, though, that this area of psycholinguistics, like many other areas of cognitive psychology, is still in its infancy. Heightened awareness of phonemes and morphemes traditionally becomes more apparent as a child takes on the tasks of learning to read, but the incipient mechanisms of Language development emerge long before the child first says ‘Mama’ (Brookman et al., 2018).

As Language continues to develop, sub-lexical manipulations such as rhyming, and later semantic contexts, are incorporated at an increasing pace and in turn begin to carry meaning. Speech perception depends largely on environmental training and stimulation. Stimulation, in turn, is associated with a person’s Personality, and Personality relates to one’s feelings, Emotions, and the lexical-symbolic links between meaning and context that motivate a person to become more or less experientially aware (Justice et al., 2018).

This architecture establishes genetic and intercellular prerequisites for Language, which are then upregulated, downregulated, and potentiated by other genetic factors. These factors arise from large numbers of genes with widely disparate expression patterns, which do not always produce the anticipated proteins. Examining the parent genes, down to their start codons, does not guarantee that the genes passed on to offspring will, for better or for worse, be expressed and develop as expected. Baseline innate Language capabilities (the genes, proteins, and cognitive faculties given to essentially all typically developing newborns) are then nurtured and amplified into differing degrees of Language ability. This is where capability and ability differ. That difference, together with the complexity of the neural landscape described above, exemplifies the high degree of plasticity in Language (Pace et al., 2017).

In short, psycholinguistic research suggests that, however innate Language may be, there is no clear-cut Language gene through which heritable advantages or adaptively advantageous abnormalities are simply ‘copied and pasted’ from parent to offspring, at least not yet.

LANGUAGE -

Anatomy of Language Processing

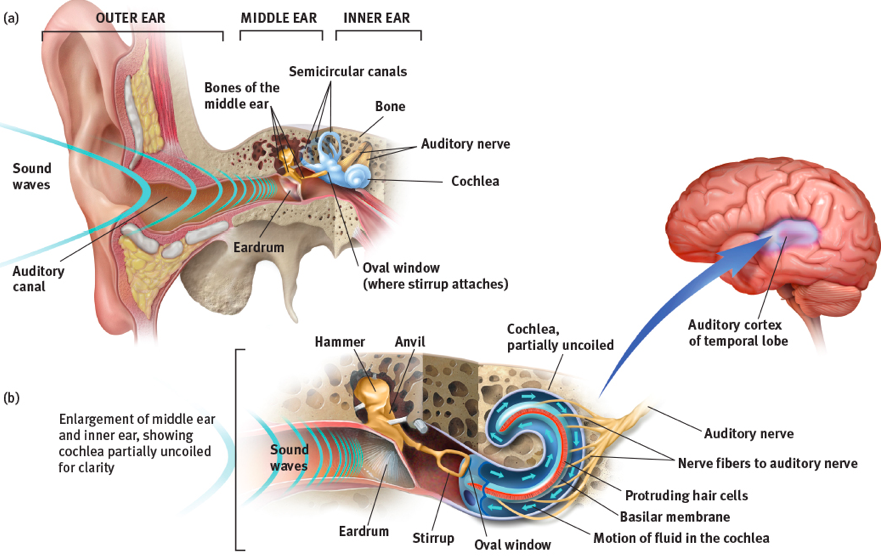

The Auditory System is key to how the Brain and the Mind hear sounds in general, and the sounds of Language in particular. Understanding it offers a unique perspective on where and how Language is processed, and can point to areas of specialization and to diagnosable disorders. Sound is everywhere around us. Some of it reaches us and is recognized, while some of it does not; I’ll explain more about this in a minute. First, here is a brief summary of how sound is heard, before going into detail.

Sound waves first reach the Outer Ear, which funnels them through the ear canal to the Eardrum. Behind the Eardrum lies the Middle Ear, and beyond that the Inner Ear, which houses the fluid-filled Cochlea. The excitation of the tiny inner-ear hairs called Cilia by incoming sound is the ‘first contact’ between the Auditory System of the Mind and the Body and the sounds of Mother Nature (Musiek & Baran, 2018; Schwartze & Kotz, 2020). The frequency and amplitude information in the sound wave is then carried to the Brain, where it passes through other regions that further dissect and transform it along the way (Musiek & Baran, 2018). Now, let’s use a few forces of Mother Nature to compare and illustrate how, when, and where our ears actually receive and process sounds.

In our Inner Ears, millions of Cilia are anchored in the tissue of the Cochlea, well beyond the Eardrum. The Cilia are about to be contacted by Mother Nature. Outdoors, air is set in motion by natural forces, chiefly the uneven heating of the Earth’s surface by the Sun, and the result is Wind. As an aside, if you listen closely, you’ll notice that every gust of Wind makes a faint, whistle-like sound. Wind carries energy, and that Energy is transferred to whatever it touches. The millions of Cilia are like a forest of Trees. The Wind blows the Trees’ leaves, then their branches, then their trunks, and so on, all the way down each tree. The Wind’s Energy is converted and ‘felt’ by the Tree, no longer as moving air but as Vibrations. Vibrations, and the Energy they carry, flow from top to bottom, all the way down to the tree’s roots in the ground. In this comparison, the ground is the Cochlea; the ground ‘feels,’ or detects, the Wind’s Energy. Think of that Energy as arriving in tiny packets of information, which I’ll call quanta. Energy information that the ground (the Cochlea) detects from the Wind (Sound) has been converted, or transformed, but the flow of Energy does not stop here. Heavy gusts of Wind cause the ground to move beneath the vibrating Trees; in the same way, the tissue of the human Inner Ear and its Cochlea moves, too (Lin et al., 2021).

Now, let’s follow the Sound completely inside the Ear and the Brain. As the Sound travels inward, from canal to Eardrum and from Eardrum to Inner Ear, the information it carries is converted from patterned sound frequencies and periods into corresponding electrochemical frequencies and periods. Along the way, the Eardrum’s vibrations move three tiny bones in the Middle Ear, which pass them on to the Cochlea. There, the bending Cilia open ion channels in their cells, turning the Vibrations into electrochemical signals, and the Auditory Nerve sends organized Impulses of information to deeper neural networks, first those local to the Ear (Musiek & Baran, 2018). The same process runs in parallel in both Ears. These networks receive and deconstruct the sounds. Because the Brain receives Sound as electrochemical Impulses that preserve its pattern, the meaning of the information is not lost, even after being transformed (Schwartze & Kotz, 2020; Key & D'Ambrose Slaboch, 2021). The signals then travel quickly along the auditory pathway: first to the Hind Brain (the brainstem), then to the Mid-Brain, then through the Thalamus, and finally to the Primary Auditory Cortex (A1) in each Cerebral Hemisphere. Brainstem circuits along this route also support reflexes such as the acoustic startle response (Goswami, 2018; Key & D'Ambrose Slaboch, 2021).

The intake and translation of sound information described here can be compared to decoding Morse code. The auditory system treats sound much as a Morse operator treats taps, except that the sound’s ‘tapping’ is translated into a pattern of electrochemical Impulses. That pattern means nothing until it is further decoded and translated into something meaningful, a process that, for this discussion, begins in the Hind Brain, so that the information can eventually be stored (Lin et al., 2021).

As you can see, Energy information in the Air (Wind and Sound) is converted, transformed, sliced up, and put back together many times: first outside the Body, then by the Ear, and then deep inside the Brain, beginning with the Hind Brain. The information in every drop of Energy that swirls around us (each quantum) is interpreted by the Mind, and although its form changes along the way, the information it carries is preserved. Energy out and energy in! Some sounds that reach our ears are Language. What happens next to Sounds of Language that have reached the Ears?

From here, the two major Language functions, comprehension and production, are handled mainly by the Left Hemisphere, which is dominant for Speech in more than ninety percent of humans. By contrast, the Right Hemisphere plays roles in Speech that are less related to semantics and more related to the intonation, stress pattern, loudness variation, pausing, rhythm, and tone of Speech. Both hemispheres take part in music processing (for example, producing, understanding, recognizing, and recalling music) and in sound localization (telling whether a honking car or a screaming child is in front of us, behind us, or to our left or right) (O'Connor, 2018; Lin et al., 2021).

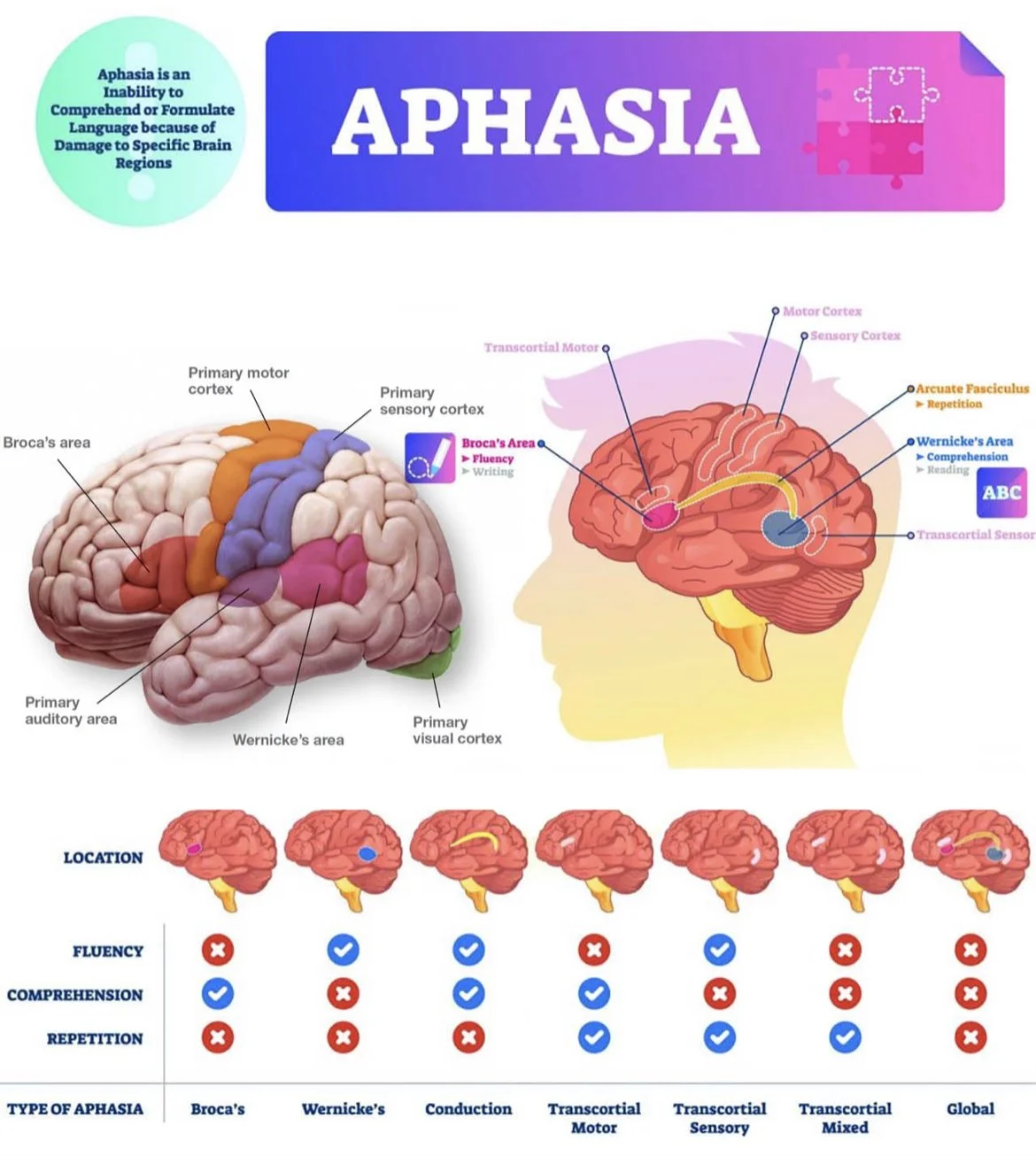

Ventral and dorsal pathways support comprehension and production. In the 1860s, Broca correctly proposed that the Left Hemisphere is responsible for Language production. What happens exactly? Information comes into both Auditory Cortices, one per hemisphere, and is processed in both places. In the Left Hemisphere, processing continues to the point of semantic understanding: phonemes, then syllables, and eventually sentences are extracted, and the processed sounds are sent to the Temporoparietal Junction (TPJ), where the Temporal Lobe meets the Parietal Lobe, very close to the Occipital Lobe. At the TPJ, incoming sound is thought to be matched against a cognitive ‘look-up table,’ or dictionary, which is the Brain’s lexicon for spoken Language and reading. Written information likewise reaches the TPJ from the Primary Visual Cortex (and, for touch-based reading such as Braille, from the somatosensory cortex), where it is read in. Damage to this region is associated with Wernicke’s Aphasia (Musiek & Baran, 2018; Schwartze & Kotz, 2020).

Wernicke’s Aphasia (WA) is typically characterized by difficulty understanding Language rather than difficulty producing Speech. People with WA usually speak fluently, with a natural rhythm, but their Speech is often filled with wrong or invented words, so it can carry little meaning, and they may not be aware of their errors. Language and Speech are not the same thing, and WA shows why the distinction matters. Language is more of a mental construct, whereas Speech, which manifests Language, comes about by way of coordinated physical mechanisms located mostly in the Mouth itself. The following comparison will clarify.

Individuals who are diagnosed with WA are like travelers who wake up in a country whose language they have never learned. The voices around them are clear, but the words carry little meaning, and when they answer, their own words flow freely yet make little sense to their listeners. Their mouths work; it is the connection between sound and meaning in the Language parts of their Brains that has been disrupted. Even psych-professionals sometimes misjudge these patients as confused or ‘slow.’ They are not slow! To me, patients who have Wernicke’s Aphasia are foreign residents in their own bodies.

Under normal conditions, once something is heard and understood (Language), a response (Speech) is produced. But how is all that done? Certain areas of the Parietal Lobe are responsible for a whole slew of functions that neither we nor Science fully understands or knows how to explain. Generally, though, the Parietal Lobe has ‘association areas.’ These non-specific areas not only integrate heterogeneous, overlapping streams of visual, auditory, and somatosensory input; they also process these streams more finely into meaningful insights for visual-spatial cognition and for memory (Luria, 1973; Luria et al., 2010). However, before this meaningful information can be ‘saved,’ just as a document is saved after editing, the information in the Parietal Lobe is assigned, labeled, and addressed to the location where it will be filed and saved, in this case the Frontal Lobe (Aversi-Ferreira et al., 2019). Likewise, before packages of information within the Parietal Lobe are assembled and shipped off to designated locations in the Frontal Lobe and, selectively, to other areas of the Cerebrum, the type of information helps to dictate its final destination. The Speech-related dorsal areas of the frontal Operculum, as just one of these potential destinations, are most likely to be ‘most accepting’ of information packages that describe directions and procedures (Friederici et al., 2017).

When those areas in the Frontal Lobe, along with the Speech behaviors that depend on them, are adequately connected with Broca’s area, they help to facilitate further development of the structural capabilities of Speech (for example, pronunciation, utterance styles, phonemic accent and emphasis changes, and Speech power, pitch, and pace). When that connection is damaged, a person may still have Language but cannot readily produce Speech; this is Broca’s Aphasia (Aversi-Ferreira et al., 2019). In Broca’s Aphasia, understanding is largely preserved, but producing Speech is slow, effortful, and halting. Individuals with Broca’s Aphasia are like patients who wake up in the middle of heart surgery with an anesthesia mask covering their mouths: they can hear and understand what is being said to them (Language), and they even have the mental faculties to put their thoughts into words, but their mouths are not well connected to the Language parts of their Brains. Their mental constructs of Language are trapped inside them; they know what they want to say but often cannot say it. In Wernicke’s, individuals are foreign residents in their own bodies. But in Broca’s Aphasia, individuals are prisoners in theirs (American Journal of Neuroradiology, 2020).

In Broca’s Aphasia, people experience what we experience when, for example, we step away from the kitchen with our cell phones in our pockets and walk downstairs to our basements. What happens to our cell phone’s service? We quickly notice that our signal drops from full bars to none, incoming messages lag, and sending messages to the outside world is out of the question. In the same way, people with Broca’s Aphasia have mouth muscles that are not ‘hooked up’ or ‘plugged in’ to the part of their brains (that is, Broca’s area) that is primarily responsible for the cerebral and neuromuscular components of Speech production (Orpella et al., 2017). With Wernicke’s Aphasia, the signal gets through but the messages arrive garbled, which is difficult to cope with in its own way. But with Broca’s Aphasia, ‘all the lights in the house’ and ‘all the gears’ internally could be working well, but where’s a signal or an extension cord when you need it?

Language production depends on the Left Hemisphere, while prosody (the melody of Speech: its intonation, stress, and rhythm) depends largely on the Right Hemisphere. Within the Left Hemisphere, the caudal (posterior) regions around the TPJ are responsible for comprehension, whereas the frontal region is related to production (Friederici et al., 2017). Because Language production is cognitively demanding, speakers improvise in Speech, using cognitive strategies that reduce those demands and make production more efficient. One strategy is pre-formulation: producing phrases the individual uses frequently. Such phrases make up about sixty-five percent of reused phrases, since there are already set ways to produce the chosen words, sentences, paragraphs, and so on. Another is insufficient specification: using simplified expressions instead of specific ones (for example, ‘something, something’ or ‘and the like’) (Orpella et al., 2017).

The four levels of Language production are semantic (meaning/intention), syntactic (grammar), morphological (word constructs), and phonological (pronunciation); once articulated into Speech, these levels are essentially responsible for the substance of what is said. We will expound on these levels in a future post.

Language & Speech

Differentiating Language from Speech in early childhood development is key to recognizing the telltale signs of healthy development versus deficiency.

Interchangeable or Different?

Language and Speech are not the same thing. To laypeople, Language and Speech may appear to be interchangeable, but in terms of development they are very different. Simply put, Language is the whole system of symbols and words, which includes spoken, written, signed, and gestural communication. Speech, on the other hand, refers only to the actual sounds of spoken Language and is an oral form of communication. For example, a child with a Speech delay may pronounce certain words incorrectly (such as 'toof' rather than 'tooth') (Hoff, 2019).

LANGUAGE & SPEECH –

Speech

The spoken manifestation of Language is most commonly known today as Speech, but the underlying physiology and associated Speech mechanisms are far less widely known. Speech is more than just a bunch of sounds and signals. When put together and uttered, Speech signals and sounds create words, phrases, and sentences, which are then associated with ideas, meanings, and intentions. For most individuals, sounds and signals in the form of verbal Speech are the most commonly used mode of Language production. Other, less common modes can be just as effective at conveying thoughtful communication. This is true of Sign Language (for example, hand gestures), written symbols (for example, written letters and orthography), and even smoke signals from a campfire (whose patterns have previously assigned meanings), all of which can do the same job as Speech (Collins et al., 2018; McNeill et al., 2018). Accurately receiving the meaning of a signal, symbol, or sound depends on already knowing what that signal means and what idea it is associated with. Each signal must therefore be pre-assigned to a specific idea; this can be difficult to keep straight, for example, with sign language in the deaf community, where access to different modes of communication varies (Gilkerson et al., 2018).

Verbal speakers have a kaleidoscope of sounds, with many specific distinctions and gray areas in between, to which meanings and ideas can be assigned; thus, accessibility is very high. The accessibility of verbal Speech differs from that of sign language in the deaf community, which relies on a visual channel: handshapes and movements paired with facial expressions. Sign languages are fully expressive languages, but signer and viewer must be able to see each other, which limits where and how they can be used. Human nature tends to favor behavior that is easier and more energy efficient. This is one reason the primary mode of mainstream communication today is verbal Speech rather than hand gestures: its sounds and signals are highly accessible and energy efficient (Hoff, 2019; Justice et al., 2019).

Linguistic communication, for the most part, is sound-based: different combinations of sounds convey different meanings. The order in which sound units are combined into words, sentences, and ideas determines pronunciation, articulation, and, eventually, the overall meaning and sequence of ideas. This introduction to Speech and linguistic communication helps us delve further into the realm of Speech sounds and the mechanisms by which they communicate information. We can also produce sounds that are not Speech sounds in a given language. For example, I can make a clicking sound with my mouth; clicks are not Speech sounds in English, but they are in some other languages. Typically developing humans are anatomically capable of producing a large set of sounds, and every language seems to draw on a subset of those sounds as the basis of its phonological system. In this way, all humans are not only biologically programmed to use Speech sounds but also psychologically equipped to adapt their sounds to whatever native tongue they grow up with (Gilkerson et al., 2018). No matter which language we’re talking about, the Speech-production mechanism is a universal Language process; all languages use specific and shared sounds, some more than others, to produce verbal communication.

LANGUAGE & SPEECH –

Personality

To break it down even further, Language development consists of two subtypes: expressive and receptive. Expressive Language is the ability to communicate thoughts and feelings. Receptive Language is the understanding of what is being said or communicated. Now that we briefly understand the difference between Language (expressive vs. receptive) and Speech, we wonder how they interact. They are not mutually exclusive. It isn’t ‘nature vs. nurture’ but rather ‘nature through nurture.’ Language (and Speech) can develop only if a child is given opportunity and experience (Justice et al., 2019).

An extreme example would be children growing up in a family whose members never speak to them. Even though those children may be able to produce sounds, if no one engages them in back-and-forth communication, they will not develop the oral-motor skills that support Speech development. Thus, a lack of Language opportunity directly impacts one’s Speech development (Collins et al., 2018).

Consider, by contrast, hearing children who are growing up with deaf parents who primarily use sign language. Although these children may not receive early models of Speech, they are given opportunities for Language when their parents mirror their babies’ facial expressions, communicate with them through sign, and respond appropriately to their cues. These children are learning and understanding the rules of the Language patterns around them. These early-childhood experiences with Language and Speech also impact each child’s ability to have an internal monologue, which will be discussed at a later time. Internal monologues (the ability to speak to oneself in the Mind) typically develop at around six years of age (Justice et al., 2019).

In a future installment of this blog, we will address the following related question: How can children’s linguistic environments (cultures, languages, experiences, opportunities, etc.) influence their respective Personalities (temperaments, moods, intentions)?

LANGUAGE & SPEECH -

Language, Speech, and Emotion

Thinking of Language, Speech, and Emotion prompts some of us to ask two questions:

1. How do these three key contributors help to shape one’s Personality?

2. How can we shape individual Personality through Language and Speech?

Soon, we will answer these questions as we learn more about the relationships and interplay among Language, Speech, Emotion, and Personality. Meanwhile, let’s begin to learn about the development, types, disorders, and other aspects of Personality.

Introduction to Personality

Together, Temperament, Mood, and Intentionality are today more commonly referred to as Personality, and that is the term used from here on. Personality is the coherent patterning of cognitive processes, perceptions and memories, and related experiential states of mind. Those states of mind influence the resulting emotions and behaviors, which fluctuate yet remain connected to the relationships specific to those states of mind. Within each thought or behavior, we hold many different emotions and states of self, not just the ones we display. In this way, in all of us, a huge and diverse ‘village of selves’ is integrated into every behavior we show, as well as into the myriad behaviors that remain in thought only (Johar, 2016; Collins et al., 2018).

INTRO TO PERSONALITY -

Characteristics, Cognition, and Behavior

Temperament can take obscure and elusive forms. A helpful way to illustrate the dynamic ebbs and flows of its complexity is to liken it to everyday weather. Personality has also been described as a higher-order process of thought that gives rise to consciousness; at the same time, it both shapes and is shaped by behavior, which in turn develops associated traits and characteristics of temperament. A wide gamut of cognitive intelligence is still broadly regarded as beneficial to oneself and to the world at large, and so it tends to be celebrated as advantageous overall (Collins et al., 2018; Schuller & Batliner, 2013).

At the same time, the experiential context of temperament (how we appraise a situation and how we respond to the decisions it prompts, coupled with our motives) creates a remarkably wide array of possible characteristics. Delineating and differentiating every possible scenario linked to temperamental traits and characteristics is one of many tasks involved in Personality assessment, and it makes Personality problems among the most difficult of all cognitive problems to identify accurately, let alone to diagnose and treat. Even if we consider only rational and irrational decision-making, assessing Personality is an immensely difficult feat, because Personality operates fluidly, in the blink of an eye, and entirely independently of our say-so (Schuller & Batliner, 2013; Wiggins, 1996).

Diagnostically, Personality simply describes the way in which we feel and think; shaped by environmental constraints and heritable aspects of character, it influences our thoughts, decisions, and behaviors. We certainly have free will and the perceived freedom to act as we please; at the same time, this distinction is really an oversimplification. As long as the Personality traits we act on keep our thinking and behavior from straying from what is socially and culturally expected, our Personality is considered acceptable, adaptive, and functional (Wiggins, 1996).

Problems arise quickly when our behavior deviates from these acceptable patterns and becomes persistently disorganized and unexpectedly maladaptive. Personality Disorders (PDs) affect the ways in which we perceive ourselves and others and relate and respond to others emotionally, and they can dictate or redirect the spontaneous behavior that would otherwise be productive for the self. A later blog post will discuss PDs in detail (American Psychological Association, 2021).

Personality disorders are typically inflexible and persistently impairing, and because they are also socioculturally unacceptable, they often lead to increasingly damaging interpersonal problems. PDs and communicatively insidious traits of temperament usually overlap or share features, and PD symptoms are often comorbid with other major psychiatric conditions, as noted in the DSM-5. The different ways in which Personality manifests itself are therefore worth considering; we will discuss them later, along with the distinct subgroups, or clusters, into which PDs are divided (American Psychological Association, 2021).

Tranquil winds come and go, soothing along the way, but winds that gust and trample usually signal the beginning of a storm that shakes and booms with violent bursts of thunder, rain, sleet, or snow. We can think of temperament in the same way: the rising winds of emotional, unstable, even destructive traits of temperament generally feed the persistence of a stormy Personality. In this picture, heightened emotionality turns into maladaptive Personality symptoms, and emotional traits such as impulsivity and anxiety inhibit productive thinking and socially normative behavior (Schuller & Batliner, 2013; Wiggins, 1996).

INTRO TO PERSONALITY -

Borderline Disorders and Comorbid Misdiagnosis

Identifying the core substance of anything is challenging at best, but uncovering all of its active and influential components can make diagnosis more useful for everyone involved. Diagnostic discrepancies aside, temperament and mood disorders are misdiagnosed too often in cognitive psychology and, comparably, in clinical psychology. The latter is evident in the diagnosis of PDs, which at times become insidiously intertwined with comorbid psychological disorders, whether typical or unspecified. Clinical assessment of borderline Personality disorder has indeed paved the way for managing patients’ categorically distinct PDs, but at what cost in plausible misdiagnoses of associated trait disorders? (American Psychological Association, 2021)

At the heart of this modern-day inquiry is an understanding of all the contributors to Personality and their storied origins. Definable constructs of Personality are well known to have taken firm root in Western Europe in the middle of the twentieth century, within an interactionist approach that joined Personality and social behavior, before spreading to North America. Constructs of Personality were defined as an individual’s own set of specific theories and beliefs, intrinsic to one’s self-apperception and, hence, to one’s perception of others and the surrounding world (Wiggins, 1996).

Before we elaborate further on the many issues of Personality, we should first step back and explain the psychological basis for Personality and how that basis relates to Personality disorders. We will do just that in an upcoming blog post, where we’ll also examine the heritability, epigenetics, and associated traits of PDs, which are closely tied to, and shift dynamically among, the most common borderline Personality disorders.

Cerebral, Emotional Intelligence (EI) and Personality

Success in professional, scholastic, and other worldly domains goes hand in hand with elevated levels of cognitive function, and that reciprocal relationship underscores the purpose of neuropsychological inventories.

Cognitive Aptitude

As far as we know, when there is evidence of cognitive change due to neural damage and/or cerebral degeneration, neuropsychological analysis is an appropriate refinement of clinical-neurological observation, intended to support and improve neurological treatment (Alghamdi et al., 2017; Sanchez-Alvarez et al., 2020).

With this knowledge in mind, it’s important to be aware of other measures of aptitude that are just as integral and influential as ‘brute brains’ alone, if not more so. Evidence-based behavioral and neuropsychological research points to the well-worn nature-vs.-nurture debates, which try to tease apart how perception and environment shape cognitive capability, including the epigenetic loci that underlie cognitive fluidity, let alone modes of memory retrieval. (Loci, the plural of locus, is a genetic term for specific positions, usually on a chromosome.) Analogous pragmatic inquiries seek to elucidate the ‘heartbeat,’ or beginning-moment, mechanisms of Personality, which still appear to reside within the mysterious, ether-like substance of Emotion that underlies the origins of Personality’s multifarious fabric (mood, temperament, intentionality) (Denny et al., 2019; Sanchez-Alvarez et al., 2020).

CEREBRAL, EI & PERSONALITY -

Emotional Effectivity on Cerebral Processing

Recent and mixed lines of psychological inquiry into the effects of Emotion on cerebral processing, along with novel appraisals of experience-based increases in neural dendritic growth, have been well received; yet their lack of empirical substance means that they still await modern neuropsychological elaboration and confirmation in everyday psychological performance (Collins et al., 2018; Sanchez-Alvarez et al., 2020). Intelligence testing aims to measure and compare, but it lags in predicting the kaleidoscopically shifting hues of Personality and, likewise, how those features relate to elevated levels of creative and/or critical thought. Even so, elevated intellectual capability does not seem, for better or for worse, to act alone (Sanchez-Alvarez et al., 2020; Suleman et al., 2019).

CEREBRAL, EI & PERSONALITY -

Beginnings of Emotion, Personality, and Intelligence

Nearly all terrestrial forms of life begin in similar ways, and humans, with their usual ten fingers and ten toes, are no exception. From a biological standpoint, what happens between fertilization and birth is well chronicled. From a psychological perspective, however, what happens in utero is a different matter altogether (Pishghadam et al., 2020).

Did Emotion come first, or did theory of mind (ToM) precede it? Perhaps both developed simultaneously, but even if they did, that fact doesn’t tell us whether Emotion or Personality explains how we perceive, act, and react within the many degrees of Personality-governed Emotion that are central to psychological life. At this juncture, we may be tempted to dwell too long on how and where to begin such lines of questioning; however, it’s important to note that much of the ‘hard work’ has already been done. We may not know exactly where or how to start, but knowing both the start and the end gives us an alternative yet feasible starting point, one that turns the problem into a game of ‘closing the gap’ (Suleman et al., 2019).

For example, suppose we are assembling a bike whose metal frame has already been soldered and structurally pre-assembled, so from there we only need to add the handlebars and wheels. After assembly is complete, we take the bicycle for a test ride and notice that the frame bends and vibrates. Working backward, we can draw conclusions about the types of metal and solder that were used in the pre-assembly phase, instead of analyzing and chemically testing the strength of the frame’s metal at the outset (before adding the handlebars and wheels) to confirm that it can support the bike’s structural soundness.

Does this make sense? We know how the bike (brain development) starts (fertilization of the egg by a sperm) and how it ends (full-term birth, at roughly 40 weeks of gestation); that is enough information to ‘close the gap’ in figuring out how the different components of the pre-assembly phase (in utero) affect the assembly and the test drive of the finished product (Alghamdi et al., 2017).

CEREBRAL, EI & PERSONALITY -

Emotion

Emotion is a transient internal state of feeling, in both the Body and the Mind, that is inhibited, excited, and/or changed through the exchange of input and output involved in processing and appraising stimuli. Invasive recordings of the Thalamus, the Motor Cortex, and the Basal Ganglia provide important insights into the neuropsychological mechanisms of Emotion, as do measures that track biometric and ocular changes corresponding to autonomic and voluntary markers of our psychophysiological states. Emotions occur both consciously and subconsciously (Pishghadam et al., 2020; Suleman et al., 2019).

When we’re triggered by something upsetting, we likely experience (usually subconsciously) some form of anger, whatever its intensity. Here, we say that we have experienced Emotion. Driving offers a good example of an emotional reaction. Let’s say that we’re on our way to drop off our child at school. Suddenly, a nearby driver who is taking selfies at the wheel cuts us off. We likely slam on the brakes instantly and/or do whatever is needed to avoid that person’s car. In the milliseconds that follow this reaction, we consciously experience Emotion, again likely in the form of anger. In both the subconscious and the conscious phase, it is safe to say that simple, everyday physiological measures (for example, heart rate, blood pressure, and EKG readings) would change in ways that mirror the function of the associated vital organs, driven largely by hormonal stress reactions. At its most fundamental level, experiencing Emotion, whether subconsciously or consciously, incurs a cellular debt in the form of energy. That energy cannot be generated through the electron-transport chain (ETC) without available oxygen, which our blood delivers. Emotion-selective neurons, from the Occipital-Temporal visual cortices to the medial and ventral Frontal Cortex, also require this oxygen to process incoming visual stimuli so that those stimuli, too, can be evaluated and categorized (Collins et al., 2018; Suleman et al., 2019).

Functional imaging (for example, fMRI) does advance our understanding of Emotion and its underlying physiological processes; however, it is quite limited when zooming in to the cellular and subcellular levels, partly because its resolution is measured in millimeters rather than in single cells, and partly because we don’t know the emotion of an atom, let alone of a cell (Alghamdi et al., 2017; Pishghadam et al., 2020).

CEREBRAL, EI & PERSONALITY -

Intelligence

The last two major sections of this post have addressed various facets of Personality and Emotion, to a certain extent. The role and basic influence of Intelligence have been mentioned but not yet covered in detail; we will present that detail in a future post (Alghamdi et al., 2017; Sanchez-Alvarez et al., 2020).

Personality is derived, formed, and developed, and it lives in our entire being. If I had to place it somewhere that we humans can relate to, I’d say that Personality exists in a fondue pot of consciousness that we perceive as our reality. The citrus fruit that we first dip into the chocolate fondue is small, but it is influenced by many different qualities of the chocolate. Is the chocolate thin and flowing like a syrup? Or is it thick like a proper fondue? Is it dark, or is it light? Is it sticky, or not? Is it made from cocoa beans (that is, real), or is it synthetic (for example, a hallucination or delusion)? As the citrus fruit spends more time in the chocolate fondue, and as we keep dipping it in and out, the delicious fruit grows larger and larger, its layers increasing much like the layers of a growing onion. In other words, I imagine Personality living within a pool of something much larger, more fluid, and farther reaching than itself. Yes, consciousness is there, too, but that’s a lengthy topic for another day (Pishghadam et al., 2020; Sanchez-Alvarez et al., 2020).

Summary

Language is an awesome power and a gift! It is more than just polysyllabic sparks. Language shapes, relates, associates, and sometimes even complicates our everyday experiences, but not without purpose or hope. The capacities of Language are categorically heterogeneous, yet they overlap in many ways. Coupled with Personality and Emotional Intelligence, its ‘like-spheres,’ to shape how we think, feel, and behave, these capacities are undeniably central to our human states of selfhood.

Language and Personality govern each other’s internal mechanisms more than we might have supposed. Language does come first. Then Personality quickly takes over, drawing in the interplay of Emotion and Intelligence with our influences and behaviors. No doubt, this unification affects the meaning and value of the relationships that arise and become active in each sphere; these spheres then act together to give rise to prospective states of mind. Clearly, Language was, and remains, a vehicle for our internal voice, whose innermost expressions are rooted in feeling.

Language couples spontaneously with Personality and Emotional Intelligence. If we don’t know where or how Language is acquired, filtered, and repurposed, let alone how it grows in early development and what vital communicative capacities it carries, then we fail to understand what makes us human, and we neglect individual differences. This post has also aimed to show how Language helps carry us from subconscious to conscious awareness and experience of self, which in turn shapes our perceptions of feelings, thoughts, and intentions.

Finally, a little mental time travel of our own can help us appreciate our children’s earliest Language development, so that we not only rediscover what we learned as young children but also recognize the enduring effects those lessons have had on us.

Good parenting requires nurture and, with it, education and the openness to accept conditions that may be hard to hear about upon diagnosis but usually can be understood and treated. What is first set up, however, is always subject to change; a psychological diagnosis should never replace the physical or cognitive individuality of who a person is and/or wants to be.

© 2022 Christopher Schroeder of New World Psychology, LLC.
